CRS CTO Ali Jelveh launches an initiative to democratize AI development through trust-based funding.

Overview

The Open Intelligence Foundation represents a new approach to supporting AI innovation. Founded by Ali Jelveh, CTO at CRS Credit API, the foundation addresses a critical question: how do we build AI in the right way?

We fundamentally believe AI should be created in the open, as some of our most functional and useful operating systems, such as Linux, have been. The foundation provides rapid, no-strings-attached funding to independent AI builders, researchers, and tinkerers who are shaping the future without backing from big labs or venture capital firms.

Today, we’re proud to announce our first grantee: Prince Canuma, known in the AI community as “The MLX King.”

Despite how busy things are at CRS, we’re proud that Ali has made the time to launch this initiative. The future of AI belongs to everyone, not just the few.

The Foundation’s Mission

The Open Intelligence Foundation operates on principles that distinguish it from traditional funding mechanisms:

- Trust Over Bureaucracy: We believe in the honor system. No complex reporting requirements, no equity demands, no board seats.

- Speed Matters: Days, not months, from application to funding. We move as fast as builders do.

- 100% to Builders: All volunteer-run with zero fees. Every dollar goes directly to funding independent work.

- Global by Design: Intelligence has no borders. We fund builders anywhere in the world.

- Up to $10,000 per grant: Larger amounts available for select projects that demonstrate exceptional potential.

The foundation exists to empower builders who are creating the tools, libraries, and research that will shape tomorrow’s intelligence landscape. Whether they need GPUs for training, compute credits for experiments, or simply support to keep building, we’re here to help them move faster.

Meet Our First Grantee: Prince Canuma

Prince Canuma exemplifies exactly the type of builder this foundation was created to support.

His journey started in Mozambique, where he developed a passion for technology despite limited local infrastructure. Recognizing that software offered the ability to collaborate globally, Prince moved to India in 2017, spending five years immersed in the tech community. There, he won multiple hackathons and built expertise that would later prove invaluable.

By late 2023, Prince saw an opportunity that would change the trajectory of on-device AI. Apple had released MLX, a machine learning framework optimized for Apple Silicon. While it showed promise, the infrastructure to run large language models locally simply didn’t exist. Running AI efficiently was still confined to the cloud.

Prince set out to change that.

Building the Infrastructure for Local AI

Over the past two years, Prince has created the essential infrastructure that makes AI accessible on Mac, iPhone, and iPad:

MLX-VLM (1,800+ GitHub stars, 204 forks): Vision Language Models for Apple Silicon, enabling multi-image understanding, video processing, and fine-tuning with LoRA/QLoRA. It includes a FastAPI server and a Gradio UI, supports models like Qwen2-VL, Idefics3, LLaVA, and Pixtral, and has attracted 50+ contributors. The project powers features in LM Studio and enables same-day conversions of newly released models.

MLX-Audio (2,900+ GitHub stars, 232 forks): A comprehensive Text-to-Speech, Speech-to-Text, and Speech-to-Speech library whose TTS inference runs 35% faster than PyTorch. It features real-time streaming, voice customization, and iOS/macOS Swift integration (supporting iOS 16.0+ and macOS 14.0+). The library includes advanced models like Marvis-TTS and Kokoro.

MLX-Embeddings (222+ GitHub stars): Vision and language embedding models for local inference, supporting XLM-RoBERTa, BERT, ModernBERT, SigLIP, and ColPali for multimodal retrieval applications.
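To make "multimodal retrieval" concrete, here is a minimal sketch of the ranking step that typically sits on top of an embedding model: compare a query vector with stored vectors by cosine similarity and sort. The vectors, item names, and the `rank_by_similarity` helper are illustrative assumptions; they are not outputs or APIs of MLX-Embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, corpus):
    """Return (name, score) pairs sorted from most to least similar."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in corpus.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical, hand-written 3-dimensional "embeddings" for illustration only.
corpus = {
    "cat_photo": [0.9, 0.1, 0.0],
    "invoice_scan": [0.0, 0.2, 0.9],
}
query = [1.0, 0.0, 0.0]  # stands in for an embedded text query
ranking = rank_by_similarity(query, corpus)
```

In a real pipeline the vectors would come from an embedding model, and image and text vectors would need to live in the same space for the comparison to be meaningful.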

Impact & Recognition

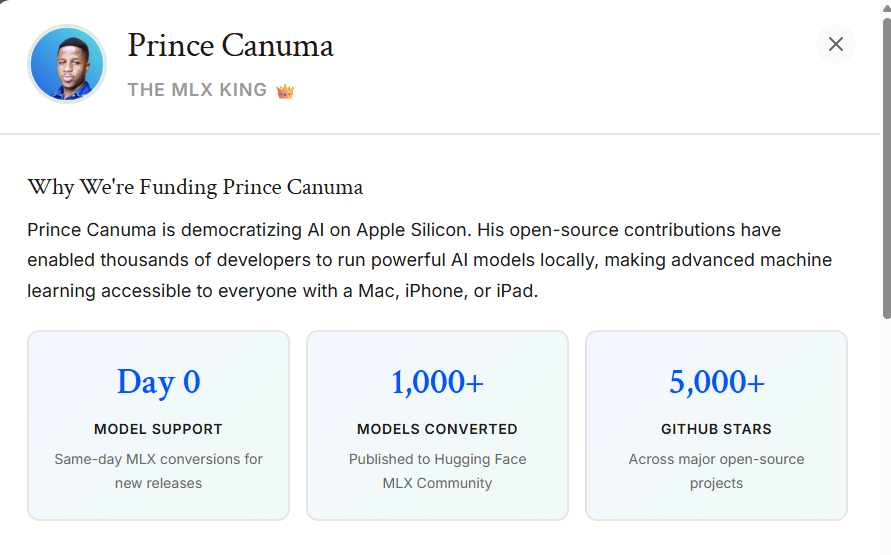

Across all his projects, Prince has achieved remarkable metrics:

| Metric | Achievement |
| --- | --- |
| Models Converted | 1,000+ published to Hugging Face MLX Community |
| GitHub Stars | 5,000+ across major open-source projects |
| Model Support | Day-zero conversions for every new release |
| Industry Adoption | Powers features in LM Studio |
| Collaborations | DeepMind, Liquid AI, Baidu, Technology Innovation Institute |

The impact has been extraordinary. Companies like DeepMind, Liquid AI, Baidu, and LM Studio have reached out to collaborate. The Technology Innovation Institute in the Middle East (creators of the Falcon models) connected with Prince after discovering their models could run at 300 tokens per second on MLX, performance they hadn’t achieved with their own infrastructure.
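For context on what a tokens-per-second figure means, the sketch below shows one simple way such a number can be measured: count the tokens a generator yields and divide by the elapsed wall-clock time. The `tokens_per_second` helper and its `clock` parameter are hypothetical names for illustration; this is not how the 300 tokens per second figure above was measured.

```python
import time
from typing import Callable, Iterable


def tokens_per_second(
    generate: Callable[[], Iterable[str]],
    clock: Callable[[], float] = time.perf_counter,
) -> float:
    """Run `generate`, count the tokens it yields, and return tokens / second."""
    start = clock()
    count = sum(1 for _ in generate())  # consume the stream, counting tokens
    elapsed = clock() - start
    if elapsed <= 0:
        raise ValueError("elapsed time must be positive")
    return count / elapsed


def fake_stream():
    """Stand-in for a model's streaming output (an assumption, not a real model)."""
    for i in range(600):
        yield f"tok{i}"
```

Calling `tokens_per_second(fake_stream)` returns the measured rate for the stand-in stream. With a real model, the same wrapper would go around its streaming generation call, ideally after a warm-up run so that model loading is not counted.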

Prince’s contributions go beyond just creating libraries. He’s built comprehensive production-ready tools including FastAPI servers, Gradio UIs, CLI tools, and Swift integrations that make MLX accessible to developers at every level. His performance optimization work achieved 35% faster TTS inference compared to PyTorch through efficient quantization and optimization techniques.

Perhaps most remarkably, Prince and three other contributors beat Microsoft’s own team by 100x on the BitNet model, a large language model designed to run with extremely low-precision (roughly 1-bit) weights. “This is Microsoft, a multi-trillion dollar company,” Prince notes. “They released the paper, they released the model, but we have systems that run much faster using their own models.”
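For readers unfamiliar with low-bit models, the sketch below illustrates how weights can be reduced to a handful of values. It assumes the ternary "absmean" scheme described for the BitNet b1.58 variant, where each weight becomes -1, 0, or 1 and a single shared scale is kept. The function name `absmean_ternary` is ours, and this is neither Microsoft's code nor the MLX implementation mentioned above.

```python
def absmean_ternary(weights, eps=1e-8):
    """Quantize floats to {-1, 0, 1} and return them with one shared scale.

    Assumed scheme: divide each weight by the mean absolute value,
    round to the nearest integer, then clip to the range [-1, 1].
    """
    scale = sum(abs(w) for w in weights) / len(weights)
    quantized = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return quantized, scale


weights = [0.9, -0.05, 0.4, -1.2]
quantized, scale = absmean_ternary(weights)
# An approximate reconstruction multiplies each ternary value by the shared scale.
approx = [q * scale for q in quantized]
```

Because every stored weight is one of three values, multiplications in a matrix product reduce to additions, subtractions, or skips, which is where much of the potential speed and memory saving comes from.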

His work has been recognized by the broader AI community as well. As noted on the TWIML AI Podcast: “Prince shares his journey to becoming one of the most prolific contributors to Apple’s MLX ecosystem, having published over 1,000 models and libraries that make open, multimodal AI accessible and performant on Apple devices.”

Why We’re Funding Prince

In early 2024, Prince left his research engineer position to work on MLX full-time, allowing him to contribute more to the open source ecosystem. The community rallied around him, with contributors helping fund an M3 Ultra Mac, demonstrating the collaborative spirit that makes open source powerful.

To expand MLX’s capabilities to support NVIDIA GPUs, test more complex models, and build ambitious new projects like his AI-powered robot, Prince needed dedicated hardware. When he posted on X that a GPU would help accelerate his work, Ali Jelveh reached out directly.

Within weeks, Prince had the GPU he needed. The impact has been immediate and significant.

“Since I got it, my productivity has increased dramatically. I can do way more models, way more complicated things,” Prince explains. “Now I have a machine here that can do the work efficiently.”

This is how funding should work for independent builders: identify talented people doing meaningful work, provide what they need to accelerate, and get out of the way.

What’s Next for Prince

With foundation support, Prince is already expanding his impact:

Company Launch: He’s preparing to start a company that will bring MLX to enterprises, offering a more efficient and cost-effective alternative to traditional NVIDIA-based infrastructure. “Instead of a company having to buy servers of NVIDIA and hire people with my expertise, like just one of me would cost $300,000, the initial investment cost goes down significantly when you go with MLX.”

NVIDIA Support: MLX now supports CUDA, and with his new GPU, Prince is building infrastructure for NVIDIA users to run these systems as efficiently as they run on Apple Silicon.

Continued Open Source Work: Prince remains committed to open source contributions, continuing to convert models on day zero of their release and collaborating with major AI labs.

Robotics Integration: He’s building a robot powered entirely by MLX, with voice generation running locally, a demonstration of how far local AI has come.

A Model for the Future

Prince Canuma’s story illustrates why the Open Intelligence Foundation exists. The future of AI shouldn’t be determined solely by those with access to massive compute budgets or venture capital. It should be shaped by brilliant, dedicated builders who care about making AI accessible to everyone.

“Open source opens up all of these doors,” Prince reflects. “My goal is to not only help the community but also these companies that are model providers, making sure that their open source efforts are being valued and people can actually take advantage of them.”

When we fund Prince, we’re not just buying him a GPU. We’re investing in a vision where:

- A developer in any country can run powerful AI models on hardware they already own

- Small teams can compete with tech giants on performance

- Open source collaboration drives innovation faster than closed systems

- The best ideas win, regardless of who funds them

This is what open intelligence looks like.

Get Involved

Prince Canuma is our first grantee, but he won’t be our last. We’re actively seeking independent AI builders, researchers, and tinkerers who need support to keep building.

Are you building something meaningful in AI? Apply for funding at openintelligencefoundation.ai/request-funding

Up to $10,000 per grant; larger amounts available for select projects

Know someone who should be funded? Nominate them at openintelligencefoundation.ai/nominate

Want to support this mission? Become a sponsor at openintelligencefoundation.ai/become-sponsor

All contributions go directly to builders. Zero fees, all volunteer-run.

Connect with Prince:

- GitHub: @Blaizzy

- X: @Prince_Canuma

- Hugging Face: prince-canuma

- LinkedIn: prince-canuma

Learn More:

- Open Intelligence Foundation: openintelligencefoundation.ai

- Ali Jelveh on X: @jelveh

- CRS Credit API: crscreditapi.com

The Open Intelligence Foundation was founded by CRS Credit API and its CTO, Ali Jelveh. We provide rapid, no-strings-attached funding to independent AI builders worldwide.